Editor’s note: This post was originally published in 2018 and has been updated since to ensure accuracy and to adhere to modern best practices.

When you’re an SEO managing a website for years and publishing content consistently, there comes a point where things can seem out of control. You’ve published so many test pages, thank you pages, and articles that you’re not even sure which URLs are relevant anymore.

Google’s algorithm updates tend to reward websites that focus on quality over quantity. To join that elite list, you need to make sure every URL on your website that’s crawled by search engines serves a purpose and is valuable to the user. In fact, if you have too many low-quality content pages on your site, Google may not even crawl every page on your site!

By letting your website grow unnecessarily large, you’re potentially leaving rankings on the table and wasting valuable crawl budget. Knowing every URL Google has in its index allows you to flag potential technical errors on your website and clean up low-quality pages, all to keep your website quality high.

In this article, we’re covering how to identify index bloat by finding every URL indexed by Google and how to fix these issues to save your crawl budget.

Note: We can help you spot and fix issues on your website that are harming your overall ranking. Contact us here.

What is Index Bloat?

Index bloat is when your website has dozens, hundreds, or thousands of low-quality pages indexed by Google that don’t serve a purpose to potential visitors. This causes search crawlers to spend too much time crawling through unnecessary pages on your site instead of focusing their efforts on pages that help your business. It also creates a poor user experience for your website visitors.

Index bloat is common on eCommerce sites with a large number of products, categories, and customer reviews. Technical issues can cause the site to be inundated with low-quality pages that are picked up by search engines.

In short, index bloat wastes your site’s crawl budget and can hold your site back in search. Keeping a clean website means search engines will only index the URLs you want visitors to find.

Index Bloat in Action: An Example

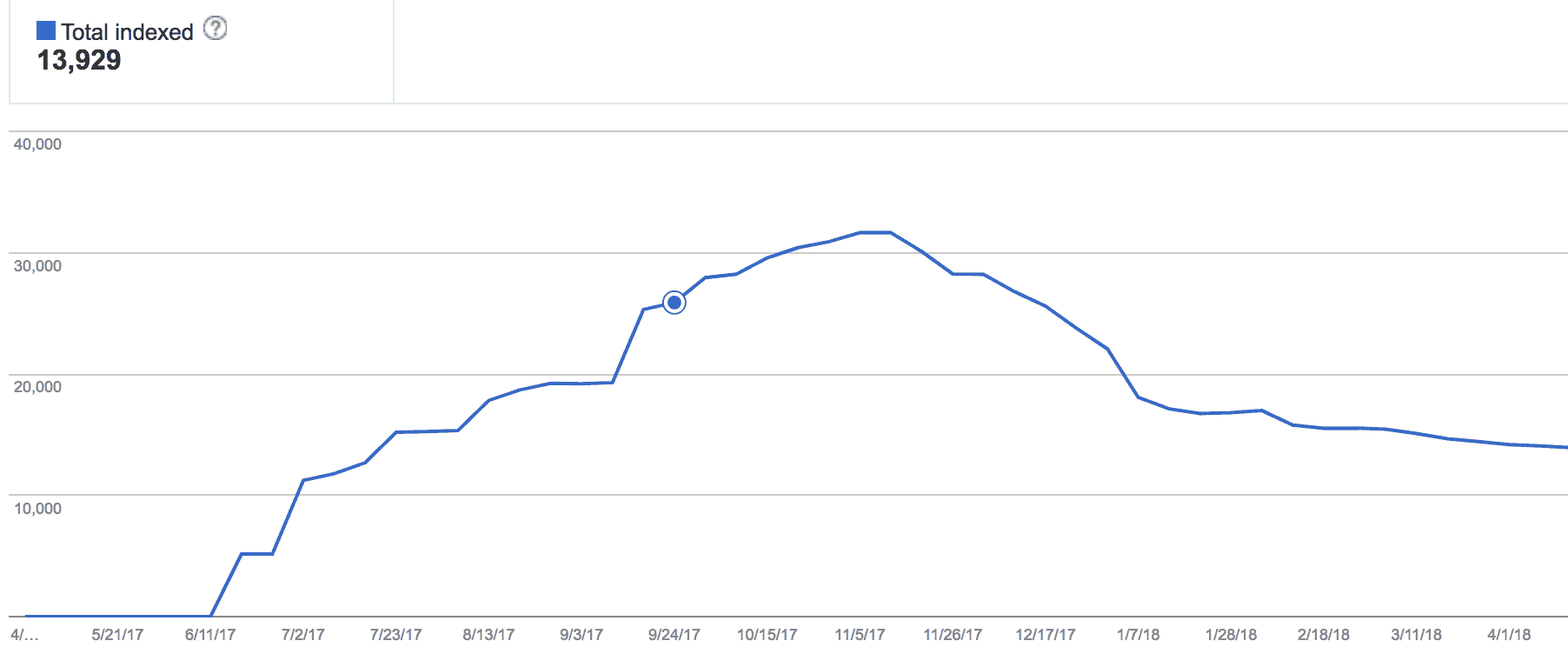

An eCommerce site that we worked with a few years ago was expected to have around 10,000 pages. But, when we looked in Google Search Console (GSC), we saw — to our surprise — that Google had indexed 38,000 pages for the website. That was way too high for the size of the site.

(Hint: You can find these numbers for your site at “Search Console” > “Coverage” > “Valid.”)

That number had risen dramatically in a short period. Originally, Google had indexed only 16,000 pages for the site. What was happening?

A problem in the site’s software was creating thousands of unnecessary product pages. At a high level, any time the website sold out of their inventory for a brand (which happened often), the site’s pagination system created hundreds of new pages. In turn, the glitch caused website indexation to increase drastically — and SEO performance to suffer.

Identifying Index Bloat

If you start noticing a sharp increase in the number of indexed pages on your site, it may be a sign that you’re dealing with an index bloat issue.
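One way to watch for this is to compare successive indexed-page counts, such as numbers you record from GSC each month. The sketch below is only an illustration: the function name and the 25% threshold are assumptions made for this example, not a Google guideline.

```python
def find_index_spikes(counts, threshold=0.25):
    """Return (position, growth) pairs where the indexed-page count grew
    by more than `threshold` (a fraction) versus the previous reading."""
    spikes = []
    for i in range(1, len(counts)):
        previous, current = counts[i - 1], counts[i]
        if previous <= 0:
            continue  # growth is undefined without a positive baseline
        growth = (current - previous) / previous
        if growth > threshold:
            spikes.append((i, round(growth, 2)))
    return spikes


# Hypothetical readings; the last two mirror the 16,000 -> 38,000 jump
# from the example earlier in this article.
monthly_counts = [15800, 16000, 38000]
print(find_index_spikes(monthly_counts))
```

A flagged reading is a prompt to investigate, not proof of index bloat. A legitimate product launch can also add many pages at once.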

Even if the overall number of pages on your site isn’t going up, you might still be carrying unnecessary pages from months or years ago. These pages could be slowly chipping away at your relevancy scores as Google makes changes to its algorithm.

If you have too many low-quality pages in the index, Google could decide to ignore important pages on your site and instead spend its time crawling less important parts of your site, including:

- Archive pages

- Tag pages (on WordPress)

- Search results pages (mostly on eCommerce websites)

- Old press releases/event pages

- Demo/boilerplate content pages

- Thin landing pages (<50 words)

- Pages with a query string in the URL (tracking URLs)

- Images as pages (Yoast bug)

- Auto-generated user profiles

- Custom Post types

- Case study pages

- Thank You pages

- Individual testimonial pages

It’s a good idea to audit these pages periodically to ensure no index bloat issues are occurring or silently developing (more on that below).

How to Find All Indexed Pages on Your Website

When your site is crowded with URLs, identifying which ones are indexed can seem like finding the proverbial needle in the haystack. Start by estimating the number of pages you expect to be indexed: add up the number of products you carry, the number of categories on your site, and any blog posts and support pages.

You should also take a more granular approach to finding all the pages on your website. Here are our suggestions:

- Create a URL list from your sitemap: Ideally, every URL you want to be indexed will be in your XML sitemap. This is your starting point for creating a valid list of URLs for your website. Use this tool to create a list of URLs from your sitemap URL.

- Download your published URLs from your CMS: If you use a WordPress CMS, consider trying a plugin like Export All URLs. Use this to download a CSV file of all published pages on your website.

- Run a Site Search query: Run a search query for your website (site:website.com) and make sure to replace website.com with your actual domain name. The results page will give you an estimated number of URLs in Google’s index. There are tools available that can help scrape a URL list from search.

- Look at your Index Coverage Report in Google Search Console: This tool tells you how many valid pages are indexed by Google. Download the report as a CSV.

- Analyze your Log Files: Log files tell you which pages on your website are most visited, including those you didn’t know users or search engines were viewing. Access your log files directly from your hosting provider backend, or contact them and ask for the files. Conduct a log file analysis to reveal underperforming pages.

- Use Google Analytics: You’ll want a list of URLs that drove pageviews in the last year. Go to “Behavior → Site Content → All pages.” In “Show rows,” you want to see as many rows as URLs that you have. Export as a CSV.
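If you would rather script the sitemap step from the list above, a minimal sketch using Python’s standard library might look like this. The sample sitemap and the example.com URLs are made up for illustration, and the function only handles a plain URL sitemap, not a sitemap index file.

```python
import xml.etree.ElementTree as ET

# Namespace defined by the sitemaps.org protocol.
SITEMAP_NS = {"sm": "http://www.sitemaps.org/schemas/sitemap/0.9"}


def urls_from_sitemap(xml_text):
    """Return every <loc> value found in a sitemap's <url> entries."""
    root = ET.fromstring(xml_text)
    return [
        loc.text.strip()
        for loc in root.findall("sm:url/sm:loc", SITEMAP_NS)
        if loc.text
    ]


# Hypothetical sitemap content; in practice you would read your own file.
sample = """<urlset xmlns="http://www.sitemaps.org/schemas/sitemap/0.9">
  <url><loc>https://www.example.com/</loc></url>
  <url><loc>https://www.example.com/products/blue-shirt</loc></url>
</urlset>"""

for url in urls_from_sitemap(sample):
    print(url)
```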

Once you’ve consolidated all of your collected URLs and removed duplicates and URLs with parameters, you’ll have your final list of URLs. Use a site crawling tool like Screaming Frog, connect it with Google Analytics, GSC, and Ahrefs, and start pulling traffic data, click data, and backlink data to analyze your website.
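The consolidation step can also be scripted. The sketch below merges several URL lists, drops duplicates, and skips any URL with a query string. Treating URLs that differ only by a trailing slash or letter case as the same page is an assumption made for this example; check that it holds for your site before relying on it.

```python
from urllib.parse import urlsplit


def consolidate_urls(*url_lists):
    """Merge several URL lists, dropping duplicates and any URL that
    carries a query string, while keeping first-seen order."""
    seen = set()
    final = []
    for urls in url_lists:
        for raw in urls:
            url = raw.strip()
            if not url or urlsplit(url).query:
                continue  # skip blanks and parameterized URLs
            # Assumption: trailing slash and letter case do not
            # distinguish pages on this site.
            key = url.rstrip("/").lower()
            if key not in seen:
                seen.add(key)
                final.append(url)
    return final


# Hypothetical exports from two of the sources listed above.
sitemap_urls = ["https://www.example.com/", "https://www.example.com/blog/post-1"]
analytics_urls = [
    "https://www.example.com/blog/post-1/",
    "https://www.example.com/search?q=shoes",
]
print(consolidate_urls(sitemap_urls, analytics_urls))
```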

You can use the data to see which URLs on your website are underperforming — and, therefore, don’t deserve a spot on your site.

How to Decide Which Pages to Delete or Remove

Deleting content from your site may seem contrary to all SEO rules. For years, you’ve been told that adding fresh content on your site increases traffic and improves SEO.

But when you have too many pages on your website that don’t add value to your users, some of those pages are probably doing more harm than good.

Once you’ve identified those low-performing pages, you have four options:

- Keep the page “as-is” by adding internal linking and finding the right place for it on your website.

- Create a plan to optimize the page.

- Leave it unchanged because it’s specific to a campaign — but add a noindex tag.

- Delete the page and set up a 301 redirect from its URL to a relevant page.

In most cases, the easiest and most efficient choice is the last one. If a blog post has been on your website for years, has no backlinks pointing to it, and no one ever visits it, your time is better spent removing that outdated content. That way, you can focus your energy on fresh content that’s optimized from the start, giving you a better shot at SEO success.
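As a rough illustration, the four options can be expressed as a simple triage function. Everything here is hypothetical: the field names, the 10-pageview threshold, and the choice to send low-traffic pages that still have backlinks to “optimize” are assumptions for the sketch, and real decisions need editorial judgment.

```python
from dataclasses import dataclass


@dataclass
class PageStats:
    url: str
    pageviews: int                # e.g. from Google Analytics, last 12 months
    backlinks: int                # e.g. from a backlink tool
    campaign_only: bool = False   # page exists only for a specific campaign
    still_valuable: bool = False  # editorial judgment, not a metric


def suggest_action(page, min_pageviews=10):
    """Map a page to one of the four options listed above."""
    if page.campaign_only:
        return "noindex"  # leave unchanged, but keep it out of the index
    if page.pageviews >= min_pageviews:
        return "keep"  # keep as-is and strengthen internal linking
    if page.still_valuable or page.backlinks > 0:
        return "optimize"  # worth a plan before deleting anything
    return "delete_and_redirect"  # no traffic, no backlinks, no clear value


pages = [
    PageStats("/blog/old-post", pageviews=0, backlinks=0),
    PageStats("/landing/spring-promo", pageviews=3, backlinks=0, campaign_only=True),
]
for page in pages:
    print(page.url, suggest_action(page))
```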

If you’re trying to decide which of the four paths above to take, we’ve got a few suggestions:

1. Use our free tool to find underperforming pages — and then delete them.

The Cruft Finder tool is a free tool we created to identify poor-performing pages. It’s designed to help eCommerce site managers find and remove thin content pages that are harming their SEO performance.

The tool sends a Google query about your domain and — using a recipe of site quality parameters — returns page content it suspects might be harming your index ranking.

After you use the tool, mark any page that is identified by the Cruft Finder tool and gets very little traffic (as seen in Google Analytics). Consider removing those pages from your site.

2. Update any necessary pages getting little traffic.

If a URL has valuable content you want people to see — but it’s not getting any traffic — it’s time to restructure.

Ask yourself:

- Is it possible to consolidate pages with another page on a similar topic?

- Can the page be optimized to better focus on the topic?

- Could you promote the content better through internal links?

- Could you change your navigation to push traffic to that particular page?

Make sure that all your static pages have robust, unique content. If Google’s index includes thousands of pages on your site with sparse or similar content, it can lower your relevancy score.

3. Prevent internal search results pages from being indexed.

Not all pages on your site should be indexed. The main example of this is the search results pages. You almost never want search pages to be indexed; other pages with higher-quality content are better places to send traffic. Internal search results pages are not meant to be entry pages.

For example, using the Cruft Finder tool for one major retail site, we discovered they had more than 5,000 search pages indexed by Google!

If you find this issue on your own site, follow Google’s instructions to get rid of search result pages, but do so carefully. Pay attention to details about temporary versus permanent solutions, when to delete pages and when to use a noindex tag, and more.

If this gets too far into technical SEO for you, reach out to our SEO team for consultation or advice.

How to Remove URLs from Google’s Index

You’ve done the hard part: You’ve identified which pages you want removed from Google’s index. Now, to make it happen.

There are five simple ways to handle pages you don’t want in Google’s index. (If your eCommerce website has a lot of zombie pages on it, see our in-depth SEO guide for fixing thin and duplicate content.)

1. Use noindex in the meta robot tag.

We want to definitively tell search engines what to do with a page whenever possible. The noindex tag tells search engines like Google not to index the page.

For this purpose, the tag is more reliable than blocking pages with robots.txt, and it’s easy to implement. Most CMSs can also add it automatically.

Add this tag inside the <head> section of the page’s HTML code:

<meta name="robots" content="noindex, follow">

“Noindex, Follow” means search engines should not index the page, but they can crawl/follow any links on that page.
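To spot-check that a page really carries the directive, you could parse its HTML. This is a minimal sketch using Python’s built-in HTML parser. The class and function names are made up for the example, and it only looks at the robots meta tag, not at an X-Robots-Tag HTTP header.

```python
from html.parser import HTMLParser


class RobotsMetaParser(HTMLParser):
    """Collects the directives from every <meta name="robots"> tag."""

    def __init__(self):
        super().__init__()
        self.directives = set()

    def handle_starttag(self, tag, attrs):
        if tag != "meta":
            return
        # HTMLParser lowercases tag and attribute names for us.
        attr = {name: (value or "") for name, value in attrs}
        if attr.get("name", "").lower() == "robots":
            for part in attr.get("content", "").split(","):
                directive = part.strip().lower()
                if directive:
                    self.directives.add(directive)


def has_noindex(html):
    """True if the HTML contains a robots meta tag with noindex."""
    parser = RobotsMetaParser()
    parser.feed(html)
    return "noindex" in parser.directives


page = '<html><head><meta name="robots" content="noindex, follow"></head><body></body></html>'
print(has_noindex(page))
```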

2. Set up the proper HTTP status code (2xx, 3xx, 4xx).

If old pages with thin content exist, remove and redirect them (through a 301 redirect) to relevant content on the site. This will maximize your site authority if any old pages had backlinks pointing to them. It also helps to reduce 404s (if they exist) by redirecting removed pages to current, relevant pages on the site.

Set the HTTP status code to “410” (Gone) if the content is no longer needed and has no relevant replacement on the site. A 404 status code is also okay, but a 410 tends to get a page out of a search engine’s index faster.
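The decision logic above can be sketched as a small lookup. The paths and table names below are hypothetical, and in practice these rules usually live in your web server or CMS configuration rather than in application code.

```python
# Hypothetical routing tables for pages that have been removed.
REDIRECTS = {"/old-summer-sale": "/sale"}  # old path -> relevant live page
GONE = {"/press/2012-launch-event"}        # removed for good, no replacement


def response_for(path, live_paths):
    """Return (status_code, redirect_location) for a requested path."""
    if path in live_paths:
        return 200, None
    if path in REDIRECTS:
        return 301, REDIRECTS[path]  # permanent redirect to related content
    if path in GONE:
        return 410, None  # content was intentionally removed
    return 404, None  # unknown URL


live = {"/", "/sale"}
for path in ["/sale", "/old-summer-sale", "/press/2012-launch-event", "/typo"]:
    print(path, response_for(path, live))
```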

3. Set up proper canonical tags.

Adding a canonical tag to a page’s <head> tells search engines which version of the page they should index.

You should ensure that product variants (mostly set up using query strings or URL parameters) have a canonical tag pointing to the preferred product page. This will usually be the main product page, without query strings or parameters in the URL that filter to the different product variants.
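For variant URLs built with query strings, the canonical target can often be derived by stripping the parameters. The sketch below assumes every parameter is a variant filter; if your platform uses parameters to identify the product itself (for example, an id parameter), this approach would point variants at the wrong page.

```python
from html import escape
from urllib.parse import urlsplit, urlunsplit


def canonical_url(url):
    """Drop the query string and fragment so variants map to one URL."""
    parts = urlsplit(url)
    return urlunsplit((parts.scheme, parts.netloc, parts.path, "", ""))


def canonical_tag(url):
    """Build the <link> element to place in the variant page's <head>."""
    href = escape(canonical_url(url), quote=True)
    return f'<link rel="canonical" href="{href}">'


# Hypothetical product variant URL.
variant = "https://www.example.com/products/shirt?color=blue&size=m"
print(canonical_tag(variant))
```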

4. Update the robots.txt file to “disallow.”

The robots.txt file tells search engines what pages they should and shouldn’t crawl. Adding the “disallow” directive within the file will stop Google from crawling zombie/thin pages, but pages that are already indexed will stay in Google’s index.

The important technical SEO lesson here is that blocking is different from noindexing. In our experience, most websites end up blocking pages from robots.txt, which is not the right way to fix the index bloat issue. Blocking these pages with a robots.txt file won’t remove these pages from Google’s index if the page is already in the index or if there are internal links from other pages on the website.

If a disallowed page is already indexed and other pages on your site still link to it, remove those internal links so crawlers have fewer paths back to it.

If your goal is to keep a page out of the index, add the “noindex” tag to the page’s <head> instead. Keep in mind that Google has to be able to crawl a page to see its noindex tag, so don’t block that same page in robots.txt.
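To see what a disallow rule does and does not cover, you can test it with Python’s standard-library robots.txt parser. The rule and URLs below are hypothetical. Note that the check only answers “may this URL be crawled?” and says nothing about whether the URL is indexed, which is exactly the distinction described above.

```python
from urllib.robotparser import RobotFileParser

# Hypothetical robots.txt rules that block internal search result pages.
rules = """
User-agent: *
Disallow: /search
""".strip().splitlines()

parser = RobotFileParser()
parser.parse(rules)

# can_fetch() reports crawl permission only, not index status.
search_ok = parser.can_fetch("Googlebot", "https://www.example.com/search?q=shoes")
product_ok = parser.can_fetch("Googlebot", "https://www.example.com/products/blue-shirt")
print("search page crawlable:", search_ok)
print("product page crawlable:", product_ok)
```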

5. Use the URL Removals Tool in Google Search Console.

Adding the “noindex” directive might not be a quick fix, because Google may keep the pages indexed until it recrawls them, which is why the URL Removals Tool can be handy at times. That said, use this method as a temporary solution. When you use it, pages are removed from Google’s search results quickly (usually within a few hours, depending on the number of requests).

The Removals Tool works best when used together with a noindex directive. Remember that any removals you make can be reversed later.

Why It’s Important to Fix Index Bloat: Results That Can Follow

We’ll say it again: You only want search engines to focus on the important URLs on your website. Getting rid of those that are low-quality will make that easier.

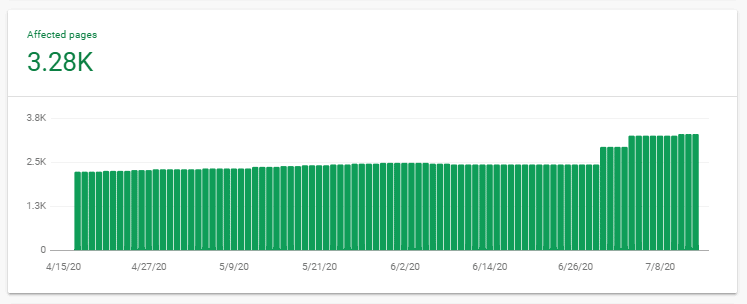

Here’s a graph of indexed pages from a client whose internal search result pages were being indexed. We helped them implement a technical fix to keep it from happening.

In the graph, the blue dot is when the fix was implemented. The number of indexed pages continued to rise for a bit, then dropped significantly.

Year over year, here’s what happened to the site’s organic traffic and revenue:

3 Months Before the Technical Fix

- 6% decrease in organic traffic

- 5% increase in organic revenue

3 Months After the Technical Fix

- 22% increase in organic traffic

- 7% increase in organic revenue

Before vs. After

- 28 percentage-point swing in organic traffic (from a 6% decrease to a 22% increase)

- 2 percentage-point gain in organic revenue growth (from a 5% increase to a 7% increase)

This process takes time. For this client, it took three full months for the number of indexed pages to return to the mid-13,000s, where it should have been all along.

Conclusion

Your website needs to be a useful resource for search visitors. If you’ve been in business for a long time, you should perform site maintenance every year. Analyze your pages frequently, make sure they’re still relevant, and confirm Google isn’t indexing pages that you want hidden.

Knowing all of the pages indexed on your site can help you discover new opportunities to rank higher without needing to always publish new content. Regularly maintaining and updating a website is the best way to stay ahead of algorithm updates and keep growing your rankings.

Note: Interested in a personalized strategy for your business to reduce index bloat and raise your SEO ranking? We can help. Request a free proposal here.