So recently I took a look over Mrs Beckham’s new eCommerce site, one of the most anticipated website launches this year, to see how well it had been optimised from an SEO perspective. I did promise a part 2 to this evaluation, so here it is.

Quite often when people think ‘SEO’ (search engine optimisation) their minds are flooded with thoughts of linkbuilding, content and rankings, so much so that they forget a very important factor: the website itself. It’s time to get technical…

Stay tuned, don’t switch off!

I’ve performed a very mini SEO technical audit of Mrs VB’s site. This isn’t going to become a web dev assessment of the site full of coded jargon, but it is going to give you some great tips on making sure your site is indexed by search engines and therefore has the potential to rank. Think of it as a guide for “How to check if your site is search engine friendly.”

Is Your Site Indexed By Search Engines?

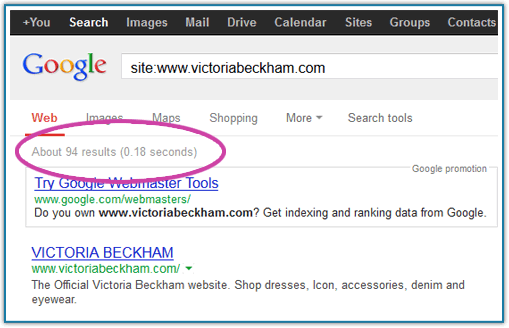

The first thing you should check post launch, once your site has been live for at least a week, is whether your site has been indexed by search engines. This means identifying whether search engine spiders have been able to crawl the pages of your site and are able to register the number of pages on your site. There is a very quick and simple way of testing this:

- Open your preferred internet browser

- Enter the URL of a search engine e.g: www.google.co.uk

- Where you would usually enter your search term, enter the following:

- Site:www.YourURL

- E.g: site:www.victoriabeckham.com

- The search engine will then return how many pages it has indexed. In Victoria Beckham’s case, the www.URL returns 94 results in Google UK

- Repeat this test with the non-www version of your URL e.g: site:victoriabeckham.com

- Is there a considerable difference in the results? For example, the non-www URL of Victoria Beckham’s site returns 93 pages in the site: results VS the 84 pages that were returned for the www version. If ‘YES’ then your site may have some canonicalization issues and you may need to do some further investigating (more on canonicalization later).

- Repeat this test across alternate search engines such as ‘Bing’ and ‘Yahoo!’
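If you would rather not type the queries by hand, the check above can be sketched in a few lines of Python. This is only a rough illustration: the function names and the 5% tolerance are my own assumptions (there is no official threshold for a "considerable" difference), and you still read the result counts off the search results page yourself.

```python
from urllib.parse import quote_plus


def site_query_url(host: str, engine: str = "https://www.google.co.uk/search") -> str:
    """Build the search URL for a `site:` query against one hostname."""
    return f"{engine}?q={quote_plus('site:' + host)}"


def differs_considerably(www_count: int, bare_count: int, tolerance: float = 0.05) -> bool:
    """Return True when the two counts differ by more than `tolerance`.

    The tolerance is a fraction of the larger count and is an assumed
    value, not a figure published by any search engine.
    """
    larger = max(www_count, bare_count)
    if larger == 0:
        return False
    return abs(www_count - bare_count) / larger > tolerance


if __name__ == "__main__":
    # Open these two URLs in your browser and note the result counts.
    print(site_query_url("www.victoriabeckham.com"))
    print(site_query_url("victoriabeckham.com"))
    # Counts entered by hand from the results pages.
    print(differs_considerably(www_count=84, bare_count=93))
```

With the 84 and 93 used in the example above, the helper flags the gap as worth investigating; a gap of only one or two pages would not be flagged.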

Common Causes For Pages Not Being Indexed By Search Engines

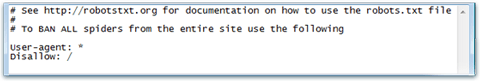

Robots.txt Files

- A robots.txt file is a single text file that sits at the root of your site. If a blocking rule has been left in it (for example from when the site was in development), it will stop search engines from crawling the content on the pages of your site

- You can test if a blocking rule has been left on your site in error by entering your website’s URL into your internet browser followed by /robots.txt. A file that blocks the entire site contains the rule ‘Disallow: /’ underneath ‘User-agent: *’

- It is absolutely fine to have a robots.txt file on your site as long as it is only blocking content you want hidden from search engine spiders. This is a common approach with PPC Landing Pages as the content may be duplicated from elsewhere on the site, or may just be great for PPC conversion rate optimisation but not so ‘SEO Friendly’
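To show the difference between a robots.txt file that blocks everything and one that only hides landing pages, here is a small sketch using Python’s built-in robots.txt parser. The two sample files, the /ppc-landing/ folder and the example.com URLs are made up for illustration; paste in the contents of your own file to test it.

```python
from urllib.robotparser import RobotFileParser

# Hypothetical file that blocks the whole site (bad news if left live).
BLOCK_EVERYTHING = """\
User-agent: *
Disallow: /
"""

# Hypothetical file that only hides a PPC landing page folder.
BLOCK_LANDING_PAGES_ONLY = """\
User-agent: *
Disallow: /ppc-landing/
"""


def is_crawlable(robots_txt: str, url: str, user_agent: str = "*") -> bool:
    """Return True if `user_agent` may crawl `url` under the given rules."""
    parser = RobotFileParser()
    parser.parse(robots_txt.splitlines())
    return parser.can_fetch(user_agent, url)


if __name__ == "__main__":
    home = "https://www.example.com/"
    offer = "https://www.example.com/ppc-landing/offer"
    print(is_crawlable(BLOCK_EVERYTHING, home))
    print(is_crawlable(BLOCK_LANDING_PAGES_ONLY, home))
    print(is_crawlable(BLOCK_LANDING_PAGES_ONLY, offer))
```

The first file stops spiders from crawling the homepage at all, while the second lets them crawl the site and only keeps them out of the landing page folder.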

Sitemap Not Submitted To Search Engine

- It is SEO best practice to submit your sitemap to Google Webmaster Tools and if possible, also Bing Webmaster Tools so that the search engines can index the correct number of pages on your site

- The perk of registering for a Google Webmaster Tools account is that Webmaster Tools gives you access to private data from Google, which can help your search performance, and notifies you if the search engine spiders have experienced any errors when crawling your site
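Most eCommerce platforms will generate a sitemap for you, but if yours doesn’t, a bare-bones one can be put together as sketched below. This is a minimal example only: the function name and the example.com URLs are my own placeholders, and it lists page addresses without any of the optional extras the sitemap format allows.

```python
import xml.etree.ElementTree as ET

# Namespace defined by the sitemaps.org protocol.
SITEMAP_NS = "http://www.sitemaps.org/schemas/sitemap/0.9"


def build_sitemap(urls: list[str]) -> str:
    """Return a minimal sitemap.xml document listing the given page URLs."""
    urlset = ET.Element("urlset", xmlns=SITEMAP_NS)
    for url in urls:
        entry = ET.SubElement(urlset, "url")
        ET.SubElement(entry, "loc").text = url
    body = ET.tostring(urlset, encoding="unicode")
    return '<?xml version="1.0" encoding="UTF-8"?>\n' + body


if __name__ == "__main__":
    # Placeholder URLs; replace with the pages you want indexed.
    print(build_sitemap([
        "https://www.example.com/",
        "https://www.example.com/dresses",
    ]))
```

Save the output as sitemap.xml at the root of your site and submit that address in Webmaster Tools.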

No Content

Which leads on nicely to my next point…

Is Your Site’s Content Being Crawled?

It is REALLY important for search engine spiders to be able to crawl the content on your site. This is how search engines identify what your website is about and determine which pages should rank for relevant user search terms. These are NOT scary spiders that you want to shoo away; you want to welcome these search engine friendly spiders to crawl all over your site constantly.

If your content cannot be crawled, your site will NOT EVER RANK in search engine results. You can check if your content is crawlable by performing the following test:

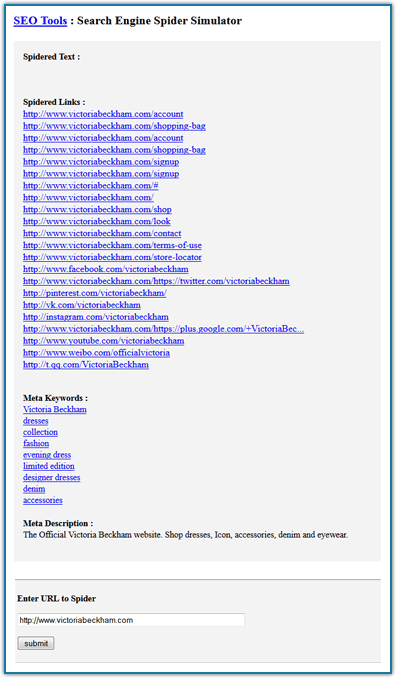

- Enter the following URL in your internet browser: http://www.webconfs.com/search-engine-spider-simulator.php

- Copy the URL of the page on your site you would like to test and paste it into the tool

- See if the report returns the content written on the page whether this is an introductory paragraph or a product description

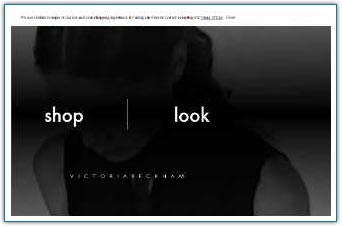

As you can see, Victoria Beckham has no content on her homepage at all. The report shows that the Meta Description can be crawled and indexed (which is very, very important), but there is no other content on the homepage to support it. As there are also duplicated Title Tags and Meta across the site, as mentioned in Part 1, it is difficult for search engines to determine which pages should rank above others for specific user search queries.

Common Causes For Site Content Not Being Crawled

There’s Nothing There

- If your site has absolutely no content on it at all (and trust me, we’ve come across plenty of eCommerce sites with no content) then there is nothing for search engines to crawl.

But you might think, Aha! Wait…my navigation panel is content! Well, this is true so long as it is all text based and not an image without any text at all. Although text link navigation will give the search engine spiders something to crawl, it is best practice to have at least 100 characters of content in addition to your navigation on each page of your site.
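For a rough idea of what a spider has to work with, the sketch below strips a page down to its text and checks it against the 100 character guideline. It is a simplified stand-in for a proper spider simulator, not how any search engine actually works: it assumes your navigation is wrapped in a nav tag (so it can be left out of the count) and it ignores the title, scripts and styles. The class and function names are my own.

```python
from html.parser import HTMLParser


class VisibleTextExtractor(HTMLParser):
    """Collect page text, ignoring script, style, title and nav blocks."""

    # Assumption: navigation is marked up with <nav>, so it can be excluded.
    SKIPPED_TAGS = {"script", "style", "title", "nav"}

    def __init__(self):
        super().__init__()
        self._skip_depth = 0  # > 0 while inside a skipped block
        self.chunks = []

    def handle_starttag(self, tag, attrs):
        if tag in self.SKIPPED_TAGS:
            self._skip_depth += 1

    def handle_endtag(self, tag):
        if tag in self.SKIPPED_TAGS and self._skip_depth > 0:
            self._skip_depth -= 1

    def handle_data(self, data):
        text = data.strip()
        if self._skip_depth == 0 and text:
            self.chunks.append(text)


def visible_text(html: str) -> str:
    """Return the text content of a page, excluding skipped blocks."""
    parser = VisibleTextExtractor()
    parser.feed(html)
    parser.close()
    return " ".join(parser.chunks)


def has_enough_content(html: str, minimum_chars: int = 100) -> bool:
    """Check the page against the 100 character guideline."""
    return len(visible_text(html)) >= minimum_chars


if __name__ == "__main__":
    page = '<body><nav><a href="/">Home</a></nav><img src="hero.jpg"></body>'
    print(repr(visible_text(page)))
    print(has_enough_content(page))
```

A page made up of navigation links and images only, like the sample here, comes back with no text at all.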

Illegal Coding

- Check the coding of the pages on your site and verify the code validates. If there are illegal characters or badly broken markup within the coding of the pages on your site, search engine spiders may not be able to crawl the content

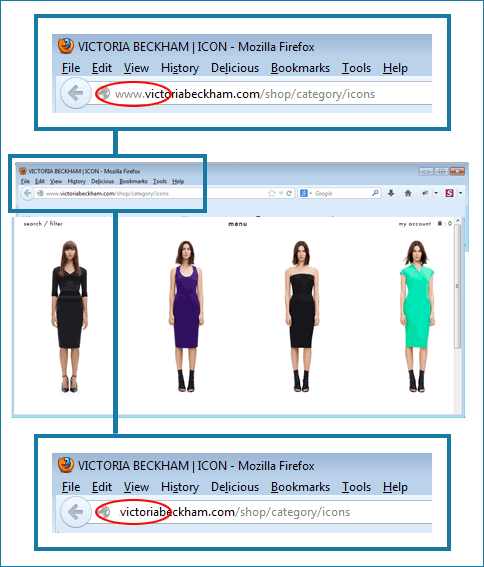

Canonicalization Issues

Okay, I know I promised no jargon but that’s about as much jargon as we are going to get. Firstly, what a horrible, horrible word to have to spell let alone say! (Whinge over).

Fear not, ‘canonicalization’ isn’t as scary as it sounds in terms of understanding what it means but it is a very important issue to address if your website is guilty of it. It basically means that the www and non-www versions of your site are not resolving into one version. This means that Google (and other search engines) are indexing multiple versions of your site.

Multiple versions of your site are BAD, BAD NEWS for RANKINGS. Since the launch of the Google Panda update, Google penalises websites that appear to feature ‘duplicate content’. So if any, or even worse every, page of your site has an individual www and non-www static URL, it is basically alerting Google to the fact that your site has been duplicated, which will result in your site dropping further down the rankings and possibly dropping out completely. This loses you traffic, which in turn loses you sales. OUCH!

Common Remedies For Canonicalization

Configure Your .htaccess File

- Configuring your .htaccess file so that all of the non-www URLs resolve to the www URLs on your site is the best option to rectify this issue but will require the assistance of a web developer. All remedies for this issue will most likely require a web developer

Implement A ‘robots.txt’ File

- If your site is built on a managed platform where you do not own the hosting for it, e.g. free web hosting and design platforms, then it is unlikely that you will be able to perform the above. You may, however, be able to request that a ‘robots.txt’ file be served on the non-www version of your site, as this will block those URLs from being crawled by search engines and reduce the duplicate content issue for your site

- If your site is managed on a ‘Magento’ platform, you will want to add the rel="canonical" tag instead, pointing at the URLs you DO want to rank in search engines. As Alex explained in her blog post on the Google Penguin Update 4/2.0, this will notify search engines that you are aware of duplicate content issues on your site but the pages with these tags are the most important and valuable to the user and should be indexed in search engine results
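Whichever remedy you go with, the idea behind it is the same: every www and non-www address should map to one preferred version. The sketch below shows that mapping and the canonical tag it produces. It is an illustration of the logic only, not the .htaccess or Magento configuration itself, and the function names and example.com addresses are placeholders of mine.

```python
from html import escape
from urllib.parse import urlsplit, urlunsplit


def canonical_url(url: str, preferred_host: str = "www.example.com") -> str:
    """Rewrite the www or non-www form of a URL to the preferred host."""
    parts = urlsplit(url)
    bare = preferred_host.removeprefix("www.")
    # Only touch URLs that belong to this site; leave other hosts alone.
    if parts.netloc.lower() in {bare, "www." + bare}:
        parts = parts._replace(netloc=preferred_host)
    return urlunsplit(parts)


def canonical_link_tag(url: str, preferred_host: str = "www.example.com") -> str:
    """Build the rel="canonical" tag for the <head> of the page at `url`."""
    href = escape(canonical_url(url, preferred_host), quote=True)
    return f'<link rel="canonical" href="{href}">'


if __name__ == "__main__":
    print(canonical_url("http://example.com/shop?page=2"))
    print(canonical_link_tag("http://example.com/dresses"))
```

A redirect in your .htaccess file sends both visitors and spiders to the preferred address, whereas the canonical tag leaves both addresses live and simply tells search engines which one to index.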

Page Load Speed Time

The amount of time it takes for your website’s pages to load is very important. Google loves a ‘good user experience’ and their lovable ‘Panda’ loves good user engagement. A slow page load speed is going to cause users to bounce off your site. This means you lose traffic and again, potential sales.

Accruing a high bounce rate is also BAD NEWS for RANKINGS. If people are bouncing off your site it’s because you weren’t what they were looking for (in Google’s eyes) or they’re just not engaging on your site. Think about how you are as a customer. You use the internet because it’s quick, convenient and efficient. If you land on a page that takes even close to a minute to load – well you won’t even realise it has taken that long to load because you won’t have stuck around that long to find out.

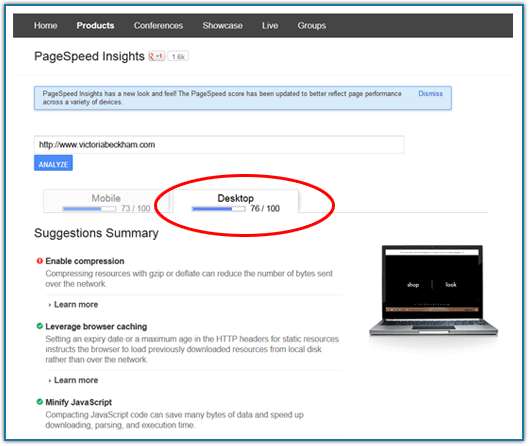

Whilst there are tools such as the Google Page Load Speed Test that can help you to determine what your current Google page load speed score is overall, there is nothing quite as accurate as cross browser, and cross device testing. I shall explain why…

Although Victoria Beckham’s site scores what would appear to be a relatively respectable 76/100 page load speed score, it isn’t 99 or 100. Now this isn’t me being harsh, this is me acknowledging just how great a leap it will still take Mrs Beckham’s site to score highly.

Let me explain:

- Google Chrome – The site’s homepage loaded relatively quickly (it took less than a minute but could be faster)

- IE9 & Mozilla Firefox – I waited 10 minutes for the site’s homepage to fully load (that means with the video flash background appearing on the page)

Fortunately, the site has been designed so that it does not rely on the background to enhance the user’s journey. But if it is going to slow down the page load speed of your site, and most people aren’t going to have even realised that video background existed, what’s the point in having it?

Improve Your Page Load Speed Time

Ways you can improve your page load speed time are as follows:

- Check the Current Speed of the Website

- Optimise Your Images

- Compress and Optimise Your Content

- Put Stylesheet References at the Top, such as in the <head>

- Put JavaScript References at the Bottom of your code

- Cache Your Web Pages
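To give a feel for what compressing your content can save, here is a small sketch that gzips a chunk of HTML and reports how much smaller it gets. The function name and the sample markup are made up for the example; real savings depend on your own pages, and compression is normally switched on at the web server rather than done by hand like this.

```python
import gzip


def compression_saving(content: bytes) -> float:
    """Return the fraction of bytes saved by gzip-compressing `content`.

    Very small inputs can come out larger once compressed, in which
    case the returned value is negative.
    """
    if not content:
        return 0.0
    compressed = gzip.compress(content)
    return 1 - len(compressed) / len(content)


if __name__ == "__main__":
    # Made-up, repetitive product listing markup.
    page = b"<li class='product'><a href='/dress'>Dress</a></li>\n" * 500
    print(f"{compression_saving(page):.0%} smaller after gzip")
```

Repetitive markup such as product listings tends to compress very well, which is why this is usually one of the easier speed wins to ask your web developer for.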

If you missed my recent Wifi Workshop where I explored the saints and sinners of the online fashion industry and are looking for tips on how to increase your fashion eCommerce revenue, you can view my presentation on Slideshare.net: ‘7 Deadly Sins of Fashion eCommerce’

Follow my blog for more fashion eCommerce insights.